Deploying a High-Availability Kubernetes Cluster with Ansible and HAProxy

This article is a complete, practical guide to building a highly available (HA) Kubernetes cluster with Ansible and HAProxy. The cluster build itself is automated through an Ansible playbook; only the CNI plugin is applied by hand at the end. That makes it a good fit for anyone, from beginners to professional DevOps engineers, who wants to prepare a production-ready Kubernetes infrastructure.

🧠 What Is Kubernetes High Availability (HA)?

Kubernetes HA is an architecture in which more than one control plane node (master) runs at the same time to keep the cluster available. If one control plane node fails, the remaining nodes take over automatically, so applications keep running without interruption.

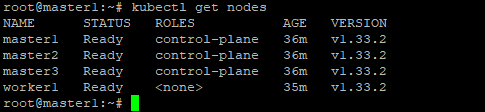

In this tutorial, you will build:

- 3 master nodes
- 1 worker node
- 1 load balancer node (HAProxy)
- Container runtime: containerd
- Automation with Ansible roles

📁 Ansible Project Directory Structure

    .
    ├── inventory
    │   └── hosts.ini        # IP address and role of each host
    ├── playbook.yml         # Main deployment playbook
    ├── reset-k8s.yml        # Playbook to reset the cluster
    └── roles                # Ansible roles, one per function
        ├── common           # Base configuration for every host
        ├── containerd       # Installs containerd
        ├── cri-tools        # Installs crictl
        ├── kubernetes       # Installs kubelet, kubeadm, kubectl
        ├── lb-haproxy       # Installs and configures HAProxy
        ├── master-init      # Initializes the control plane on master1
        ├── master-join      # Joins master2 and master3 to the control plane
        └── worker-join      # Joins the worker to the cluster

📌 Inventory Configuration: inventory/hosts.ini

    [master]
    master1 ansible_host=206.237.97.101 hostname=master1
    master2 ansible_host=206.237.97.103 hostname=master2
    master3 ansible_host=206.237.97.104 hostname=master3

    [worker]
    worker1 ansible_host=206.237.97.102 hostname=worker1

    [lb]
    lb ansible_host=206.237.97.76 hostname=lb

    [all:vars]
    ansible_user=UserKamu
    ansible_ssh_pass=PasswordKamu
    lb_ip=206.237.97.76

📝 Notes:

- Use UserKamu (or another non-root user) that has sudo access.
- Avoid logging in directly as root, and avoid keeping the password in the file.
- Make sure SSH key-based authentication is already set up.
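As a rough sketch of the key-based setup these notes recommend, the connection settings could live in a group variables file instead of the inventory. The file name group_vars/all.yml, the user name deploy, and the key path are assumptions made for illustration; ansible_user and ansible_ssh_private_key_file are standard Ansible connection variables.

```yaml
# group_vars/all.yml (hypothetical file placed next to playbook.yml)
ansible_user: deploy                             # assumed non-root user with sudo rights
ansible_ssh_private_key_file: ~/.ssh/id_ed25519  # assumed key path on the control machine
lb_ip: 206.237.97.76                             # same load balancer IP as in hosts.ini
```

With a file like this in place, ansible_user and ansible_ssh_pass would no longer be needed under [all:vars], so no password has to be stored in the project.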

📜 Main Playbook: playbook.yml

    - name: Deploy Kubernetes Cluster
      hosts: all
      become: yes
      roles:
        - common
        - containerd
        - cri-tools
        - kubernetes
        - { role: lb-haproxy, when: "'lb' in group_names" }
        - { role: master-init, when: "inventory_hostname == 'master1'" }
        - { role: master-join, when: "inventory_hostname != 'master1' and 'master' in group_names" }
        - { role: worker-join, when: "'worker' in group_names" }
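The single play above applies every base role to every host, including the load balancer. One possible alternative, shown here only as a sketch and not as the layout this article uses, is one play per group. The play names are made up; the role names are the ones from this project. Facts stored with set_fact on master1 stay available to later plays in the same run through hostvars, which is what the join roles rely on.

```yaml
# Alternative layout (a sketch): one play per host group.
- name: Configure the load balancer first
  hosts: lb
  become: true
  roles:
    - common
    - lb-haproxy

- name: Prepare every Kubernetes node
  hosts: "master:worker"
  become: true
  roles:
    - common
    - containerd
    - cri-tools
    - kubernetes

- name: Initialize the first control plane node
  hosts: master1
  become: true
  roles:
    - master-init

- name: Join the remaining control plane nodes
  hosts: "master:!master1"
  become: true
  serial: 1          # assumption: joining one node at a time is gentler on etcd
  roles:
    - master-join

- name: Join the workers
  hosts: worker
  become: true
  roles:
    - worker-join
```

The trade-off is a longer playbook in exchange for dropping the when conditions and keeping Kubernetes packages off the load balancer.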

🔁 Reset Playbook: reset-k8s.yml

    - name: Reset Kubernetes Cluster
      hosts: all
      become: true
      tasks:
        - name: Reset kubeadm
          shell: kubeadm reset -f

        - name: Remove Kubernetes config
          file:
            path: "{{ item }}"
            state: absent
          loop:
            - /etc/cni/net.d
            - /root/.kube
            - /etc/kubernetes
            - /var/lib/etcd
            - /var/lib/kubelet
            - /var/lib/cni
            - /run/containerd

        - name: Reload containerd and kubelet
          systemd:
            name: "{{ item }}"
            state: restarted
            enabled: yes
          loop:
            - containerd
            - kubelet

        - name: Clear iptables rules
          shell: iptables -F
          ignore_errors: yes

        - name: Clear IPVS rules
          shell: ipvsadm --clear
          ignore_errors: yes

        - name: Clean network interfaces
          shell: ip link delete cni0 || true
          ignore_errors: yes

🔐 Security tip: if the cluster is already in use, back up all important configuration before running the reset.
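Because the reset is destructive, one option is to guard it with a confirmation prompt and a quick archive of /etc/kubernetes. The following is only a sketch: the variable name confirm_reset and the backup path /root/kubernetes-backup.tar.gz are hypothetical, and the archive covers just the configuration directory, not etcd data.

```yaml
- name: Reset Kubernetes Cluster (guarded variant)
  hosts: all
  become: true
  vars_prompt:
    - name: confirm_reset          # hypothetical variable name
      prompt: "This wipes Kubernetes state on every host. Type 'yes' to continue"
      private: false
  tasks:
    - name: Stop unless the operator confirmed
      ansible.builtin.assert:
        that: confirm_reset == 'yes'
        fail_msg: "Reset cancelled by the operator."

    - name: Archive /etc/kubernetes before it is removed
      ansible.builtin.command: tar -czf /root/kubernetes-backup.tar.gz -C /etc kubernetes
      args:
        removes: /etc/kubernetes   # skipped on hosts where the directory does not exist

    # The tasks from reset-k8s.yml would follow here.
```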

🔧 Role common/tasks/main.yml

    - name: Install required system packages
      package:
        name:
          - tar
          - wget
          - curl
          - iproute2
          - iptables
        state: present

    - name: Set hostname
      hostname:
        name: "{{ inventory_hostname }}"

    - name: Overwrite resolv.conf
      copy:
        dest: /etc/resolv.conf
        content: |
          nameserver 8.8.8.8
          nameserver 1.1.1.1

    - name: Enable kernel modules
      copy:
        dest: /etc/modules-load.d/k8s.conf
        content: |
          overlay
          br_netfilter

    - name: Load kernel modules
      modprobe:
        name: "{{ item }}"
      loop:
        - overlay
        - br_netfilter

    - name: Set sysctl params
      copy:
        dest: /etc/sysctl.d/k8s.conf
        content: |
          net.bridge.bridge-nf-call-iptables=1
          net.ipv4.ip_forward=1

    - name: Reload sysctl
      command: sysctl --system

    - name: Disable swap
      shell: |
        swapoff -a
        sed -i '/ swap / s/^/#/' /etc/fstab
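The sysctl and swap tasks above report a change on every run. A more idempotent variant might look like the sketch below. It assumes the ansible.posix collection is installed on the control machine and that fact gathering is enabled (the default); the tasks would sit after the kernel modules are loaded, since the bridge setting only exists once br_netfilter is present.

```yaml
- name: Set sysctl params (idempotent variant)
  ansible.posix.sysctl:
    name: "{{ item.name }}"
    value: "{{ item.value }}"
    sysctl_file: /etc/sysctl.d/k8s.conf
    state: present
    reload: true
  loop:
    - { name: net.bridge.bridge-nf-call-iptables, value: "1" }
    - { name: net.ipv4.ip_forward, value: "1" }

- name: Turn swap off only when some is configured
  ansible.builtin.command: swapoff -a
  when: ansible_facts['swaptotal_mb'] | int > 0

- name: Comment out active swap entries in /etc/fstab
  ansible.builtin.replace:
    path: /etc/fstab
    regexp: '^([^#\n].*[ \t]swap[ \t].*)$'   # lines that are not yet commented
    replace: '# \1'
```

On a second run, the sysctl values already match, swap is already off, and the fstab lines already start with #, so none of the three tasks should report a change.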

🔧 Role containerd/tasks/main.yml

    - name: Install containerd
      apt:
        name: containerd
        state: present

    - name: Configure containerd
      shell: |
        mkdir -p /etc/containerd
        containerd config default > /etc/containerd/config.toml

    - name: Set SystemdCgroup
      replace:
        path: /etc/containerd/config.toml
        regexp: 'SystemdCgroup = false'
        replace: 'SystemdCgroup = true'

    - name: Restart containerd
      systemd:
        name: containerd
        enabled: yes
        state: restarted

🔧 Role cri-tools/tasks/main.yml

    - name: Download crictl
      get_url:
        url: https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.33.0/crictl-v1.33.0-linux-amd64.tar.gz
        dest: /tmp/crictl.tar.gz

    - name: Extract crictl
      unarchive:
        src: /tmp/crictl.tar.gz
        dest: /usr/local/bin
        remote_src: yes
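The role installs the crictl binary but does not tell it which runtime to talk to. A possible follow-up task, offered as a sketch rather than as part of the original role, writes /etc/crictl.yaml with the same containerd socket path that the kubeadm commands in this article use.

```yaml
- name: Point crictl at the containerd socket
  ansible.builtin.copy:
    dest: /etc/crictl.yaml
    mode: '0644'
    content: |
      runtime-endpoint: unix:///run/containerd/containerd.sock
      image-endpoint: unix:///run/containerd/containerd.sock
```

After this, a command such as crictl ps should work on the node without passing the endpoint on the command line.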

🔧 Role kubernetes/tasks/main.yml

    - name: Install prerequisites
      apt:
        name:
          - apt-transport-https
          - ca-certificates
          - curl
          - gpg
        state: present
        update_cache: yes

    - name: Create keyring directory
      file:
        path: /etc/apt/keyrings
        state: directory
        mode: '0755'

    - name: Add Kubernetes APT key
      shell: |
        curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.33/deb/Release.key | \
          gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
      args:
        creates: /etc/apt/keyrings/kubernetes-apt-keyring.gpg

    - name: Add Kubernetes APT repository
      copy:
        dest: /etc/apt/sources.list.d/kubernetes.list
        content: |
          deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.33/deb/ /

    - name: Install Kubernetes packages
      apt:
        name:
          - kubelet
          - kubeadm
          - kubectl
        state: present
        update_cache: yes

    - name: Enable and start kubelet
      systemd:
        name: kubelet
        enabled: yes
        state: started
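Since kubelet, kubeadm, and kubectl are installed from a version-pinned repository, it can be worth stopping a routine apt upgrade from moving them unexpectedly. The sketch below is an optional addition, not part of the original role; it marks the three packages as held, which has the same effect as running apt-mark hold on them.

```yaml
- name: Hold Kubernetes packages at the installed version
  ansible.builtin.dpkg_selections:
    name: "{{ item }}"
    selection: hold
  loop:
    - kubelet
    - kubeadm
    - kubectl
```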

🔧 Role lb-haproxy/tasks/main.yml

    - name: Install haproxy
      apt:
        name: haproxy
        state: present
        update_cache: yes

    - name: Configure haproxy
      copy:
        content: |
          global
            log /dev/log local0
            log /dev/log local1 notice
            daemon

          defaults
            log global
            mode tcp
            option tcplog
            option dontlognull
            timeout connect 5000
            timeout client 50000
            timeout server 50000

          frontend kubernetes-frontend
            bind *:6443
            default_backend kubernetes-backend

          backend kubernetes-backend
            balance roundrobin
            option tcp-check
            server master1 206.237.97.101:6443 check
            server master2 206.237.97.103:6443 check
            server master3 206.237.97.104:6443 check
        dest: /etc/haproxy/haproxy.cfg

    - name: Restart haproxy
      service:
        name: haproxy
        state: restarted
        enabled: yes
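The backend above repeats the master IP addresses that are already in inventory/hosts.ini. As a sketch of one way to avoid that duplication, the server lines could be generated from the master group with a Jinja2 loop, and the file checked with haproxy -c before it replaces the live configuration. This assumes every host in the master group defines ansible_host, as the inventory in this article does.

```yaml
- name: Configure haproxy from the inventory (sketch)
  ansible.builtin.copy:
    dest: /etc/haproxy/haproxy.cfg
    validate: haproxy -c -f %s      # reject the file if HAProxy cannot parse it
    content: |
      global
        log /dev/log local0
        log /dev/log local1 notice
        daemon

      defaults
        log global
        mode tcp
        option tcplog
        option dontlognull
        timeout connect 5000
        timeout client 50000
        timeout server 50000

      frontend kubernetes-frontend
        bind *:6443
        default_backend kubernetes-backend

      backend kubernetes-backend
        balance roundrobin
        option tcp-check
      {% for host in groups['master'] %}
        server {{ host }} {{ hostvars[host]['ansible_host'] }}:6443 check
      {% endfor %}
```

Adding a fourth master to the inventory would then be enough to get it into the load balancer on the next run.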

🔧 Role master-init/tasks/main.yml

    - name: Initialize Kubernetes control plane on master1
      shell: |
        kubeadm init \
          --control-plane-endpoint={{ lb_ip }}:6443 \
          --upload-certs \
          --pod-network-cidr=192.168.0.0/16 \
          --cri-socket unix:///run/containerd/containerd.sock
      register: kubeadm_init
      failed_when: kubeadm_init.rc != 0 and "already initialized" not in kubeadm_init.stderr

    - name: Get discovery token CA cert hash
      shell: |
        openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | \
          openssl rsa -pubin -outform der 2>/dev/null | \
          openssl dgst -sha256 -hex | \
          sed 's/^.* //'
      register: ca_cert_hash_result
      when: inventory_hostname == "master1"

    - name: Set ca_cert_hash as a fact
      set_fact:
        ca_cert_hash: "{{ ca_cert_hash_result.stdout }}"
      when: inventory_hostname == "master1"

    - name: Generate certificate key
      shell: kubeadm init phase upload-certs --upload-certs | tail -n1
      register: cert_key_result
      when: inventory_hostname == "master1"

    - name: Set cert_key as a fact
      set_fact:
        cert_key: "{{ cert_key_result.stdout }}"
      when: inventory_hostname == "master1"

    - name: Create kubeadm join token
      shell: |
        kubeadm token create --print-join-command
      register: join_command_result
      when: inventory_hostname == "master1"

    - name: Set join_command as a fact
      set_fact:
        join_command: "{{ join_command_result.stdout }}"
      when: inventory_hostname == "master1"

    - name: Set up kube config for kubectl
      shell: |
        mkdir -p /root/.kube
        # Plain cp instead of cp -i: there is no terminal to answer the overwrite prompt
        cp /etc/kubernetes/admin.conf /root/.kube/config
        chown root:root /root/.kube/config
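Because the other nodes join through the load balancer address, it can help to confirm that the API server is reachable via lb_ip before the join roles start. The task below is a sketch of such a check. It assumes the API server allows unauthenticated requests to /healthz, which is the usual kubeadm default but not something this article configures explicitly.

```yaml
- name: Wait until the API server answers through the load balancer
  ansible.builtin.uri:
    url: "https://{{ lb_ip }}:6443/healthz"
    validate_certs: false      # the cluster CA is not in the host's trust store
    status_code: 200
  register: api_health
  until: api_health.status == 200
  retries: 30
  delay: 10
```

Placed at the end of the master-init role, this would give the API server up to five minutes to come up behind HAProxy before anything tries to join.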

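📌 Important: Adjust the Pod Network

In the master-init role, make sure that --pod-network-cidr=192.168.0.0/16 does not overlap with your local network or VPN.

If your LAN already uses addresses in 192.168.0.0/16, change the flag to another range, for example:

    --pod-network-cidr=10.244.0.0/16

Whichever range you choose must also match the pod network configured in the CNI plugin you install later; the stock Flannel manifest, for instance, assumes 10.244.0.0/16.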
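To make the pod range easy to change and to catch an overlap early, the CIDR could be moved into a variable and checked against the node addresses before kubeadm init runs. This is only a sketch: pod_network_cidr is a hypothetical variable (for example set under [all:vars]), and the network_in_network filter assumes the ansible.utils collection and the netaddr Python package are installed on the control machine.

```yaml
# Assumes pod_network_cidr is defined, e.g. pod_network_cidr=10.244.0.0/16 under [all:vars].
- name: Refuse to continue if a node address lies inside the pod CIDR
  ansible.builtin.assert:
    that:
      - not (pod_network_cidr | ansible.utils.network_in_network(hostvars[item]['ansible_host']))
    fail_msg: "{{ item }} ({{ hostvars[item]['ansible_host'] }}) falls inside {{ pod_network_cidr }}"
  loop: "{{ groups['all'] }}"
  run_once: true

- name: Initialize the control plane with the configured CIDR
  ansible.builtin.command: >-
    kubeadm init
    --control-plane-endpoint={{ lb_ip }}:6443
    --upload-certs
    --pod-network-cidr={{ pod_network_cidr }}
    --cri-socket unix:///run/containerd/containerd.sock
```

The check only looks at the addresses Ansible connects to, so other local networks or VPN ranges would still need a manual look.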

🔧 Role master-join/tasks/main.yml

    - name: Create kubeadm join command script for control plane
      copy:
        dest: /tmp/kubeadm_join_cmd.sh
        content: |
          #!/bin/bash
          {{ hostvars['master1'].join_command }} \
            --discovery-token-ca-cert-hash sha256:{{ hostvars['master1'].ca_cert_hash }} \
            --control-plane \
            --certificate-key {{ hostvars['master1'].cert_key }} \
            --cri-socket unix:///run/containerd/containerd.sock
        mode: '0755'

    - name: Run join command to join control plane
      shell: sh /tmp/kubeadm_join_cmd.sh

    - name: Set up kube config for kubectl
      shell: |
        mkdir -p /root/.kube
        # Plain cp instead of cp -i: there is no terminal to answer the overwrite prompt
        cp /etc/kubernetes/admin.conf /root/.kube/config
        chown root:root /root/.kube/config

🔧 Role worker-join/tasks/main.yml

    - name: Create kubeadm join command script for worker
      copy:
        dest: /tmp/kubeadm_join_cmd.sh
        content: |
          #!/bin/bash
          {{ hostvars['master1'].join_command }} \
            --discovery-token-ca-cert-hash sha256:{{ hostvars['master1'].ca_cert_hash }} \
            --cri-socket unix:///run/containerd/containerd.sock
        mode: '0755'

    - name: Run join command script on worker node
      shell: sh /tmp/kubeadm_join_cmd.sh
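As written, the join task runs on every playbook run and fails on a node that has already joined. One way to make it safe to re-run, shown here as a sketch, is to skip it when /etc/kubernetes/kubelet.conf exists, a file that a successful kubeadm join leaves behind and that kubeadm reset removes.

```yaml
- name: Run join command script only on nodes that have not joined yet
  ansible.builtin.command: bash /tmp/kubeadm_join_cmd.sh
  args:
    creates: /etc/kubernetes/kubelet.conf   # present once the node has joined
```

The same guard should work for the join task in the master-join role.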

🌐 Final Step: Deploy a CNI Plugin

Without a CNI (Container Network Interface) plugin, pods cannot communicate with each other. Choose one of the following CNIs and deploy it once the cluster has been created:

✅ Install Calico

    kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/calico.yaml

✅ Or install Flannel

    kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

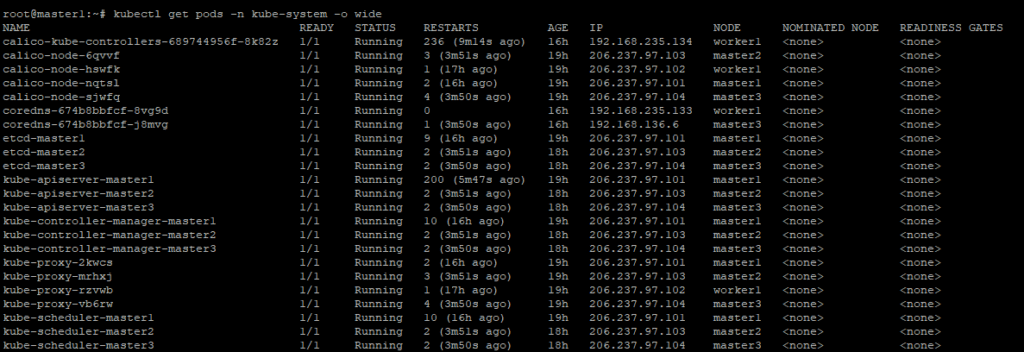

🔍 Verification

After installing the CNI, make sure all pods are running properly:

    kubectl get pods -n kube-system

Every pod should show a status of Running or Completed.
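The CNI installation and the verification can also be folded into a short play of their own, so the whole flow stays in Ansible. The play below is a sketch under a few assumptions: it runs on master1, it uses the admin kubeconfig that kubeadm init writes to /etc/kubernetes/admin.conf, and it picks the Calico manifest from this article (the Flannel URL would work the same way).

```yaml
- name: Install the CNI and wait for the cluster to settle
  hosts: master1
  become: true
  environment:
    KUBECONFIG: /etc/kubernetes/admin.conf
  tasks:
    - name: Apply the Calico manifest
      ansible.builtin.command: >-
        kubectl apply -f
        https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/calico.yaml
      register: cni_apply
      changed_when: "'created' in cni_apply.stdout or 'configured' in cni_apply.stdout"

    - name: Wait until every node reports Ready
      ansible.builtin.command: kubectl wait --for=condition=Ready nodes --all --timeout=300s
      changed_when: false

    - name: List kube-system pods for a final visual check
      ansible.builtin.command: kubectl get pods -n kube-system
      register: kube_system_pods
      changed_when: false

    - name: Show the pod list
      ansible.builtin.debug:
        var: kube_system_pods.stdout_lines
```

If the wait task times out, the pod list from a manual kubectl get pods -n kube-system is usually the quickest place to see which component is stuck.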

🎉 You now have a resilient Kubernetes HA cluster that is ready to use. With the CNI plugin installed, the cluster is fully functional. Happy experimenting! 🎯

🎯 Conclusion

By following this guide, you have:

- Automated the deployment of a Kubernetes HA cluster
- Used a well-established toolset: HAProxy, kubeadm, and Ansible
- Learned how the Ansible playbook is structured and what each role does
- Learned which risky settings to avoid, such as hardcoded passwords

👉 Remember that the cluster is only usable once a CNI plugin is installed. Good luck on your way to production with Kubernetes 💪